*Equal contribution, †Equal advising

arXiv, 2026

Most locomotion methods for humanoid robots focus on leg-based gaits, yet natural bipeds frequently rely on hands, knees, and elbows to establish additional contacts for stability and support in complex environments. This paper introduces Locomotion Beyond Feet, a comprehensive system for whole-body humanoid locomotion across extremely challenging terrains, including low-clearance spaces under chairs, knee-high walls, knee-high platforms, and steep ascending and descending stairs.

*Equal contribution, †Equal advising

CoRL, 2025

Simulation-based reinforcement learning (RL) has significantly advanced humanoid locomotion tasks, yet direct real-world RL from scratch or starting from pretrained policies remains rare, limiting the full potential of humanoid robots. Real-world training, despite being crucial for overcoming the sim-to-real gap, faces substantial challenges related to safety, reward design, and learning efficiency. To address these limitations, we propose Robot-Trains-Robot (RTR), a novel framework where a robotic arm teacher actively supports and guides a humanoid student.

*Equal contribution, †Equal advising

CoRL, 2025

Learning-based robotics research driven by data demands a new approach to robot hardware design, one that serves as both a platform for policy execution and a tool for embodied data collection to train policies. We introduce ToddlerBot, a low-cost, open-source humanoid robot platform designed for scalable policy learning and research in robotics and AI.

Science Robotics, 2025

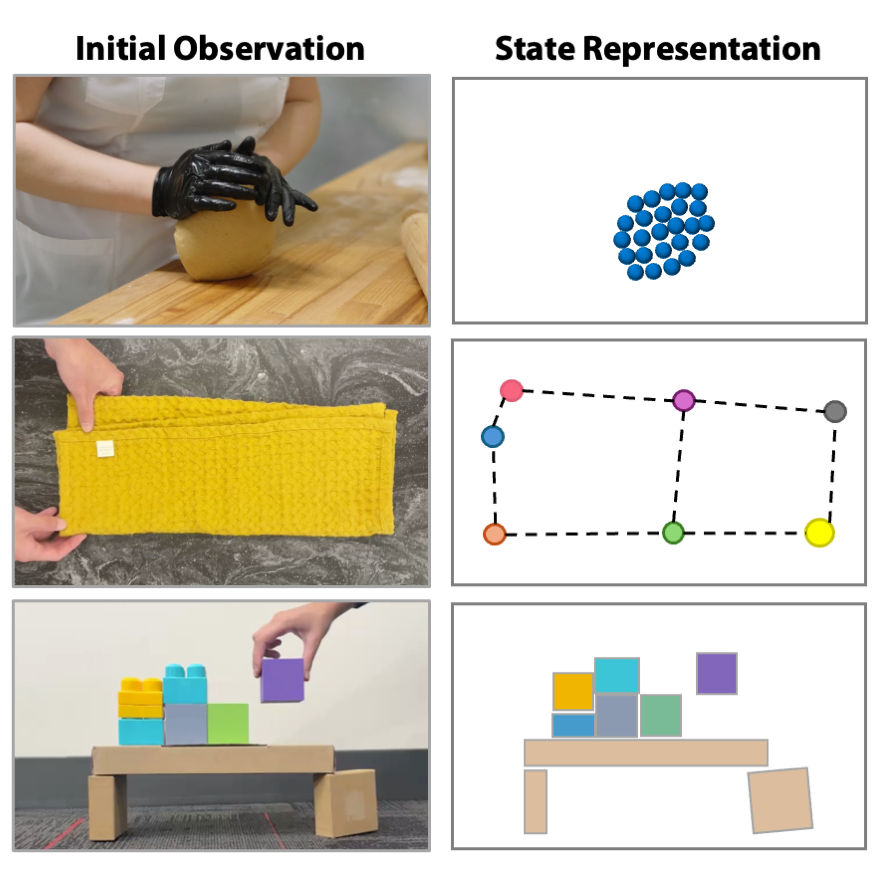

Dynamics models that predict the effects of physical interactions are essential for planning and control in robotic manipulation. Although models based on physical principles often generalize well, they typically require full-state information, which can be difficult or impossible to extract from perception data in complex, real-world scenarios. Learning-based dynamics models provide an alternative by deriving state transition functions purely from perceived interaction data, enabling the capture of complex, hard-to-model factors and predictive uncertainty and accelerating simulations that are often too slow for real-time control.

*Equal contribution

SIGGRAPH Asia, 2024

Piano playing requires agile, precise, and coordinated hand control that stretches the limits of dexterity. Hand motion models with the sophistication to accurately recreate piano playing have a wide range of applications in character animation, embodied AI, biomechanics, and VR/AR. In this paper, we construct a first-of-its-kind large-scale dataset that contains approximately 10 hours of 3D hand motion and audio from 15 elite-level pianists playing 153 pieces of classical music.

RSS, 2024

Imitation learning from human hand motion data presents a promising avenue for imbuing robots with human-like dexterity in real-world manipulation tasks. Despite this potential, substantial challenges persist, particularly with the portability of existing hand motion capture (mocap) systems and the difficulty of translating mocap data into effective control policies. To tackle these issues, we introduce DexCap, a portable hand motion capture system, alongside DexIL, a novel imitation algorithm for training dexterous robot skills directly from human hand mocap data.

*Equal contribution

RSS, 2024

Abridged in ICRA 2024 workshops: ViTac, 3DVRM, and Future Roadmap for Manipulation Skills

Tactile feedback is critical for understanding the dynamics of both rigid and deformable objects in many manipulation tasks, such as non-prehensile manipulation and dense packing. We introduce an approach that combines visual and tactile sensing for robotic manipulation by learning a neural, tactile-informed dynamics model. Our proposed framework, RoboPack, employs a recurrent graph neural network to estimate object states, including particles and object-level latent physics information, from historical visuo-tactile observations and to perform future state predictions.

*Equal contribution

The International Journal of Robotics Research (IJRR)

Modeling and manipulating elasto-plastic objects are essential capabilities for robots to perform complex industrial and household interaction tasks (e.g., stuffing dumplings, rolling sushi, and making pottery). However, due to the high degrees of freedom of elasto-plastic objects, significant challenges exist in virtually every aspect of the robotic manipulation pipeline, e.g., representing the states, modeling the dynamics, and synthesizing the control signals. We propose to tackle these challenges by employing a particle-based representation for elasto-plastic objects in a model-based planning framework.

*Equal contribution

CoRL, 2023

Best System Paper

Humans excel in complex long-horizon soft body manipulation tasks via flexible tool use: bread baking requires a knife to slice the dough and a rolling pin to flatten it. Often regarded as a hallmark of human cognition, tool use in autonomous robots remains limited due to challenges in understanding tool-object interactions. Here we develop an intelligent robotic system, RoboCook, which perceives, models, and manipulates elasto-plastic objects with various tools.

*Equal contribution

RSS, 2022

Abridged in ICRA 2022 workshop on Representing and Manipulating Deformable Objects

Covered by [MIT News] [Stanford HAI] [New Scientist]

Modeling and manipulating elasto-plastic objects are essential capabilities for robots to perform complex industrial and household interaction tasks. However, significant challenges exist in virtually every aspect of the robotic manipulation pipeline, e.g., representing the states, modeling the dynamics, and synthesizing the control signals. We propose to tackle these challenges by employing a particle-based representation for elasto-plastic objects in a model-based planning framework.

ICRA, 2021

In this work, we present a per-instant pose optimization method that generates configurations achieving specified pose or motion objectives as closely as possible over a sequence of solutions, while simultaneously avoiding collisions with static or dynamic obstacles in the environment.

Teaching

- Teaching Assistant, Stanford CS 231N, Spring 2022, Spring 2023

- Teaching Assistant, Stanford CS 109, Fall 2021, Winter 2022

- Peer Mentor, UW-Madison CS 559, Spring 2020

Talks

- [2024/01/10] Invited Talk at TechBeat [Recording in Chinese]

- [2024/01/06] Invited Talk at CAAI [Recording in Chinese]

- [2023/05/28] Invited Talk at Tsinghua University

Professional Service

- Conference Reviewer: IROS (2023, 2024, 2025), CoRL (2023, 2024, 2025), ICRA (2025), ICLR (2025), RSS (2025), Humanoids (2025)

- Journal Reviewer: RA-L